Free Cursor AI Alternative: VS Code + DeepSeek R1 + Ollama Setup

Subscription-based AI coding assistants such as Cursor, Windsurf, and GitHub Copilot are truly excellent, but their monthly fees can be a burden.

Today, I’m sharing how to get an AI editor for free by combining the VS Code Cline extension with Ollama and DeepSeek R1.

Cline is an open-source AI coding assistant extension for VS Code. It acts on natural-language instructions and generates and edits code in your project, which can significantly boost development productivity.

Other VS Code extensions with similar functionality include Continue and Roo Code. All of them are well-tested tools with active communities.

Their basic setup procedures are very similar, so choose whichever best suits your workflow. Note that Cline has no tab autocompletion, so pairing Cline with Continue (which does offer it) is also an option.

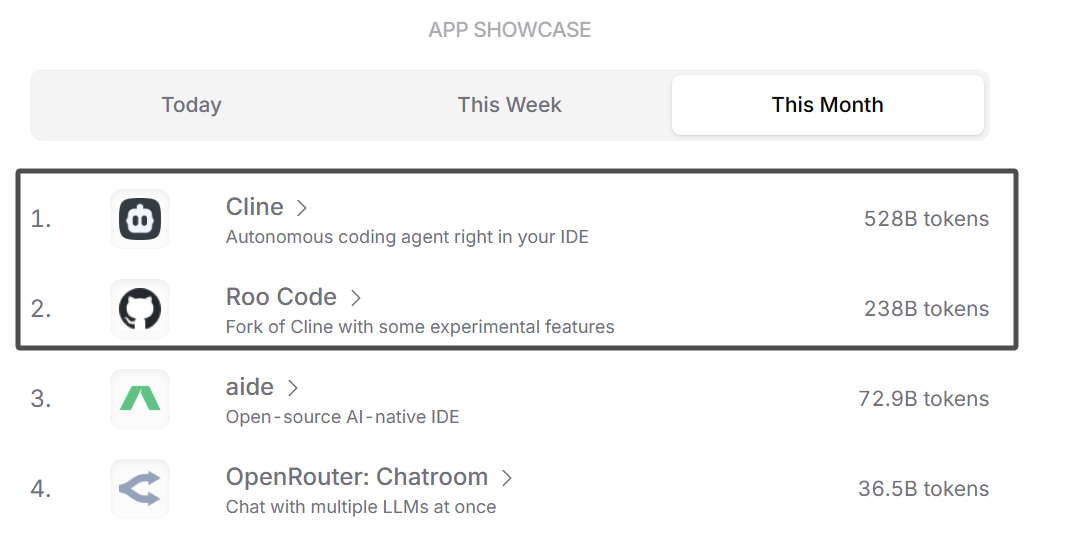

According to the token usage rankings on openrouter.ai, Cline and Roo Code use far more tokens than any other apps.

- Cline

- Continue

- Roo Code (formerly Roo Cline)

Running DeepSeek R1 Locally

Why Run Locally?

- Free and Low-Cost Operation: Cursor AI charges a monthly fee, whereas Cline is open source and DeepSeek R1 can run locally, so you get capable AI assistance at no additional cost.

- Data Security: All inference happens on your own machine, so your code and related data are never sent to external servers, which greatly improves privacy and security.

- Lower Latency: Because DeepSeek R1 runs directly on your hardware, there is no network round trip. Overall speed still depends on your machine, though: on a modest GPU or CPU, a local model can generate text more slowly than a hosted API.

Keep in mind, however, that the lightweight distilled models you can run locally do not perform as well as the full-parameter model served through the API. For most developers, especially those working in languages other than English, such as Korean, an API platform may still be the more practical choice. Treat running AI models locally as an experiment.

Installing Ollama

Ollama is a tool that makes it easy to run large language models like DeepSeek R1 locally.

- Download and install Ollama from the official Ollama website (https://ollama.com).
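Once the installer finishes, it is worth confirming that the `ollama` command is available. The sketch below prints the installed version if the CLI is on your PATH; the function name `check_ollama` is just for illustration. The Linux one-liner in the comment is the install script published on ollama.com, while macOS and Windows use the downloadable installer.

```shell
# Linux install (official script from ollama.com):
#   curl -fsSL https://ollama.com/install.sh | sh
# macOS / Windows: run the installer from https://ollama.com/download

# Hypothetical helper: report whether the ollama CLI is installed.
check_ollama() {
  if command -v ollama >/dev/null 2>&1; then
    ollama --version   # prints the installed version
  else
    echo "ollama not found on PATH; install it from https://ollama.com/download"
  fi
}

check_ollama
```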

Downloading the Desired DeepSeek R1 Model

Choose and download the model that suits your PC’s hardware; larger tags need more RAM and GPU memory.

For example, for the 7B model:

```shell
# Available tags: https://ollama.com/library/deepseek-r1
# Run exactly one of these; uncomment the size that fits your hardware.
# ollama run deepseek-r1:1.5b
ollama run deepseek-r1:7b
# ollama run deepseek-r1:8b
# ollama run deepseek-r1:14b
# ollama run deepseek-r1:32b
# ollama run deepseek-r1:70b
```
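If you are unsure which tag to pick, a rough starting point is to compare the tag against your system RAM. The sketch below is only a rule of thumb under stated assumptions: the thresholds are my own guess (model weights plus headroom for the OS and context), not official Ollama guidance, it ignores GPU memory, and the helper names `suggest_r1_tag` and `total_ram_gb` are hypothetical. The 8b tag is left out because it is roughly the same size as 7b.

```shell
# Hypothetical helper: map total RAM in GB to a deepseek-r1 tag.
# Thresholds are an assumed rule of thumb, not official guidance.
suggest_r1_tag() {
  ram_gb=$1
  if [ "$ram_gb" -ge 64 ]; then echo "deepseek-r1:70b"
  elif [ "$ram_gb" -ge 32 ]; then echo "deepseek-r1:32b"
  elif [ "$ram_gb" -ge 16 ]; then echo "deepseek-r1:14b"
  elif [ "$ram_gb" -ge 8 ]; then echo "deepseek-r1:7b"
  else echo "deepseek-r1:1.5b"
  fi
}

# Hypothetical helper: total physical memory in whole GB.
total_ram_gb() {
  if [ -r /proc/meminfo ]; then
    # Linux: MemTotal is reported in kB; round to the nearest GB
    awk '/^MemTotal:/ { printf "%d\n", $2 / 1048576 + 0.5 }' /proc/meminfo
  elif bytes=$(sysctl -n hw.memsize 2>/dev/null) && [ -n "$bytes" ]; then
    # macOS: hw.memsize is reported in bytes
    echo $(( bytes / 1073741824 ))
  fi
}

ram=$(total_ram_gb)
if [ -n "$ram" ]; then
  echo "Detected about ${ram} GB of RAM; try: ollama run $(suggest_r1_tag "$ram")"
else
  echo "Could not detect RAM; compare model sizes at https://ollama.com/library/deepseek-r1"
fi
```

If the suggested tag turns out to be too slow, step down one size; the Testing section below describes the symptoms.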

Once the download is complete, the model starts automatically and opens an interactive prompt:

```
pulling manifest
pulling 96c415656d37... 100% ▕████████████████████████████████████████████████████████▏ 4.7 GB
pulling 369ca498f347... 100% ▕████████████████████████████████████████████████████████▏ 387 B
pulling 6e4c38e1172f... 100% ▕████████████████████████████████████████████████████████▏ 1.1 KB
pulling f4d24e9138dd... 100% ▕████████████████████████████████████████████████████████▏ 148 B
pulling 40fb844194b2... 100% ▕████████████████████████████████████████████████████████▏ 487 B
verifying sha256 digest
writing manifest
success
>>> hello?
<think>

</think>

Hello! How can I assist you today? 😊

>>>
```
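`ollama run` both downloads the model and opens the chat prompt shown above (type `/bye` to leave it). If you only want to download a model, or want to check which models are already installed, the CLI's `pull` and `list` subcommands cover that. A minimal sketch, guarded so it does nothing when `ollama` is missing; the tag is the 7B example from above.

```shell
MODEL="deepseek-r1:7b"   # the 7B tag used in the example above

if command -v ollama >/dev/null 2>&1; then
  ollama pull "$MODEL"   # download (or update) the model without starting a chat
  ollama list            # list every locally stored model with its size
else
  echo "ollama is not installed; skipping"
fi
```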

While Ollama is running, the model is also reachable through its local HTTP API at http://localhost:11434, which is the address Cline will connect to.
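Before wiring up Cline, you can check that the API answers. The sketch below first requests the root URL, which replies with the plain text "Ollama is running" when the server is up, then sends one non-streaming prompt to the `/api/generate` endpoint. The variable `OLLAMA_URL` and the function `ask_ollama` are hypothetical names for this example, and the prompt is assumed not to contain double quotes because the JSON is built by hand.

```shell
# Base URL of the local Ollama server (default port 11434).
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Hypothetical helper: send one prompt to /api/generate and print the JSON reply.
# $1 = model tag, $2 = prompt (must not contain double quotes in this sketch)
ask_ollama() {
  curl -s "$OLLAMA_URL/api/generate" \
    -d "{\"model\": \"$1\", \"prompt\": \"$2\", \"stream\": false}"
}

# Only send the prompt if the server is actually reachable.
if curl -s --max-time 2 "$OLLAMA_URL/" 2>/dev/null | grep -q "Ollama is running"; then
  ask_ollama "deepseek-r1:7b" "hello?"
else
  echo "No Ollama server reachable at $OLLAMA_URL"
fi
```

With `"stream": false` the server returns a single JSON object; the generated text is in its `response` field and, for R1, starts with the same `<think>...</think>` block seen in the terminal session.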

VS Code and Cline Setup

Installing the VS Code Extension

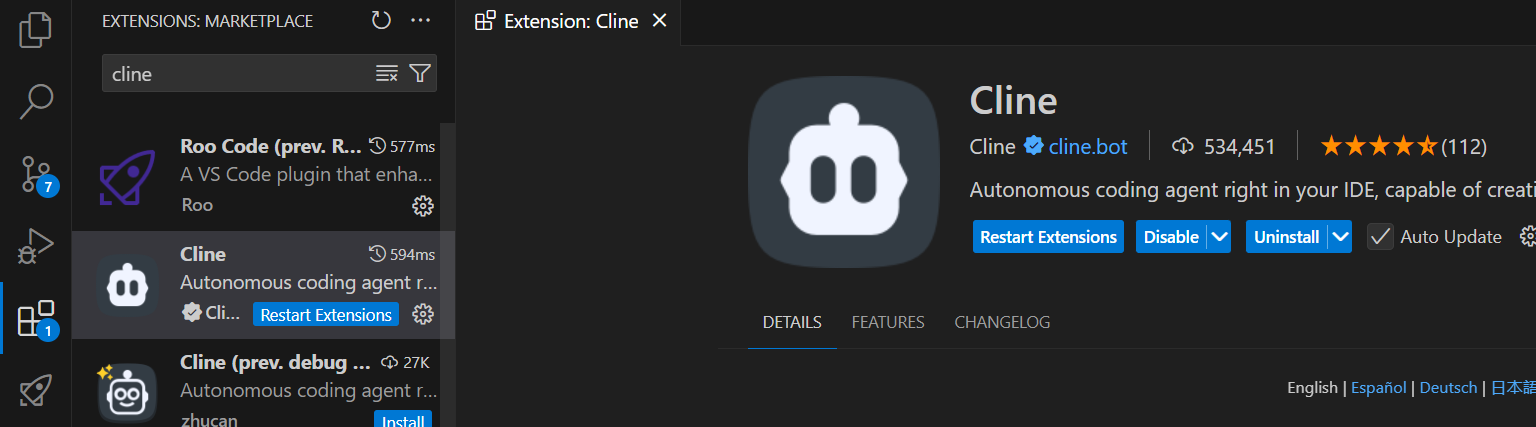

Search for Cline in the VS Code Marketplace and install it.
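If you prefer the terminal, VS Code's `code` command-line tool can install the same extension. This sketch assumes Cline's Marketplace identifier is `saoudrizwan.claude-dev` (check the extension page if the install fails) and that `code` is on your PATH; on macOS you may first need to run "Shell Command: Install 'code' command in PATH" from the Command Palette.

```shell
# Assumed Marketplace identifier (publisher.extension) for Cline.
CLINE_ID="saoudrizwan.claude-dev"

if command -v code >/dev/null 2>&1; then
  code --install-extension "$CLINE_ID"
  # Confirm the extension now appears in the installed list
  code --list-extensions | grep -i "$CLINE_ID"
else
  echo "The 'code' command is not on PATH; install Cline from the Marketplace UI instead"
fi
```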

Connecting Cline to Ollama (Local DeepSeek R1)

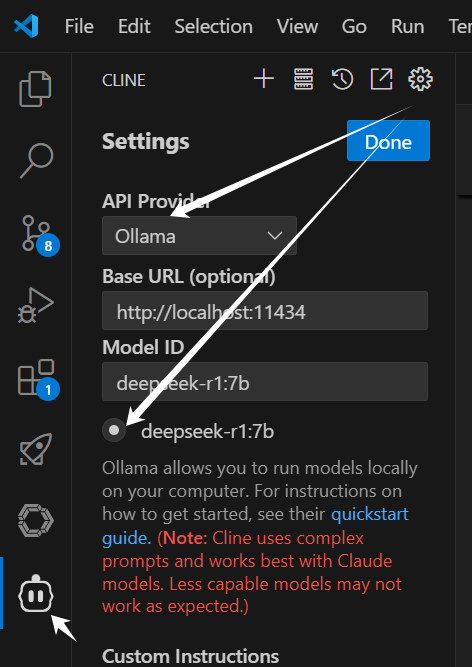

- Open Cline settings in VS Code.

- Select Ollama from the API Provider list.

- Enter http://localhost:11434 in the Base URL field and select the running DeepSeek R1 model (e.g., deepseek-r1:14b) from the model selection dropdown.

Once the DeepSeek model is installed locally, a list of available models appears automatically below the Model ID field as soon as you enter the Base URL.
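The dropdown can only offer models that Ollama actually has on disk. If it stays empty, you can ask the server directly: the `/api/tags` endpoint returns the locally installed models as JSON (the same information as `ollama list`). A minimal sketch, assuming the default address; `OLLAMA_URL`, `parse_model_names`, and `list_ollama_models` are hypothetical names, and the parsing uses grep/sed rather than jq so no extra tools are needed.

```shell
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Hypothetical helper: extract the "name" values from /api/tags JSON on stdin.
parse_model_names() {
  grep -o '"name":"[^"]*"' | sed 's/^"name":"//; s/"$//'
}

# Hypothetical helper: fetch installed models from the running server.
list_ollama_models() {
  curl -s --max-time 5 "$OLLAMA_URL/api/tags" | parse_model_names
}

models=$(list_ollama_models 2>/dev/null)
if [ -n "$models" ]; then
  echo "$models"
else
  echo "No models returned; is Ollama running at $OLLAMA_URL and has a model been pulled?"
fi
```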

If you get an "MCP hub not available" error after sending a message, restarting VS Code should resolve it (see the related GitHub issue).

Testing

Once setup is complete, test Cline by entering a prompt to confirm that it responds correctly.

If your CPU runs at full load after you send a prompt and the reply is very slow or never arrives, your PC is probably not powerful enough for that model. In that case, try a smaller model (for example, drop from 14b to 7b).
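To confirm whether a model is really running on the CPU, `ollama ps` lists the loaded models, and its PROCESSOR column shows whether each one sits on the GPU, the CPU, or is split between them. The helper `runs_on_cpu_only` below is a hypothetical name; it simply looks for "100% CPU" in that output, which assumes the column format of current Ollama versions.

```shell
# Hypothetical helper: succeed if any model in `ollama ps` output (on stdin)
# is running entirely on the CPU.
runs_on_cpu_only() {
  grep -q '100% CPU'
}

if command -v ollama >/dev/null 2>&1; then
  ollama ps   # columns: NAME, ID, SIZE, PROCESSOR, UNTIL
  if ollama ps | runs_on_cpu_only; then
    echo "Model is running on CPU only; consider a smaller tag, e.g. ollama run deepseek-r1:7b"
  fi
else
  echo "ollama is not installed"
fi

# To free disk space from a model that is too large for your machine:
#   ollama rm deepseek-r1:14b
```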

API Integration Method

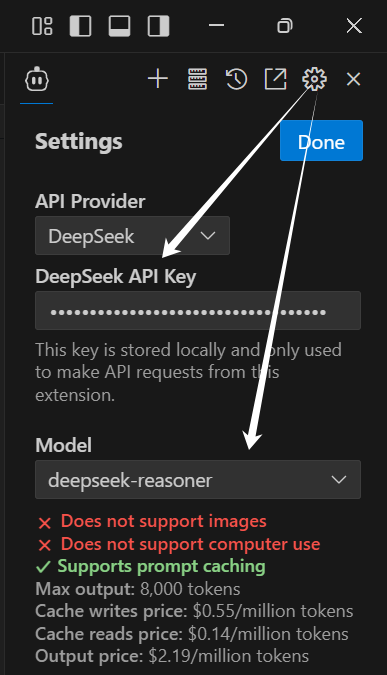

- Obtain a DeepSeek API key from the DeepSeek Platform website (https://platform.deepseek.com).

- Open Cline settings in VS Code, select DeepSeek from the API Provider list, enter your API Key, and select the model deepseek-reasoner (the API name for DeepSeek R1).
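Before pasting the key into Cline, you can check it with a single request. DeepSeek's chat endpoint follows the OpenAI Chat Completions format, so a plain curl call is enough. In this sketch the environment variable `DEEPSEEK_API_KEY` and the function `deepseek_ping` are hypothetical names; Cline itself keeps the key in its own settings.

```shell
# Hypothetical helper: send one test prompt to deepseek-reasoner.
# Reads the key from the (assumed) DEEPSEEK_API_KEY environment variable.
deepseek_ping() {
  if [ -z "${DEEPSEEK_API_KEY:-}" ]; then
    echo "Set DEEPSEEK_API_KEY first: export DEEPSEEK_API_KEY='<your key>'"
    return 1
  fi
  curl -s https://api.deepseek.com/chat/completions \
    -H "Content-Type: application/json" \
    -H "Authorization: Bearer $DEEPSEEK_API_KEY" \
    -d '{
          "model": "deepseek-reasoner",
          "messages": [{"role": "user", "content": "hello?"}]
        }'
}

deepseek_ping
```

In the JSON reply, `choices[0].message.content` holds the answer, and for `deepseek-reasoner` the model's chain of thought is returned separately in `reasoning_content`.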

With the local setup, you too can experience AI coding assistance at the level of Cursor for $0 a month! (The API option is billed per token.) If you run into any issues during setup, refer to the official Ollama documentation or the Cline GitHub issues page for help.