High-Availability Nginx Load Balancing with Keepalived and HAProxy

This article shows how to set up a high-availability load-balancing architecture for Nginx by combining Keepalived and HAProxy.

First, let's look at the drawbacks of using Keepalived or HAProxy alone.

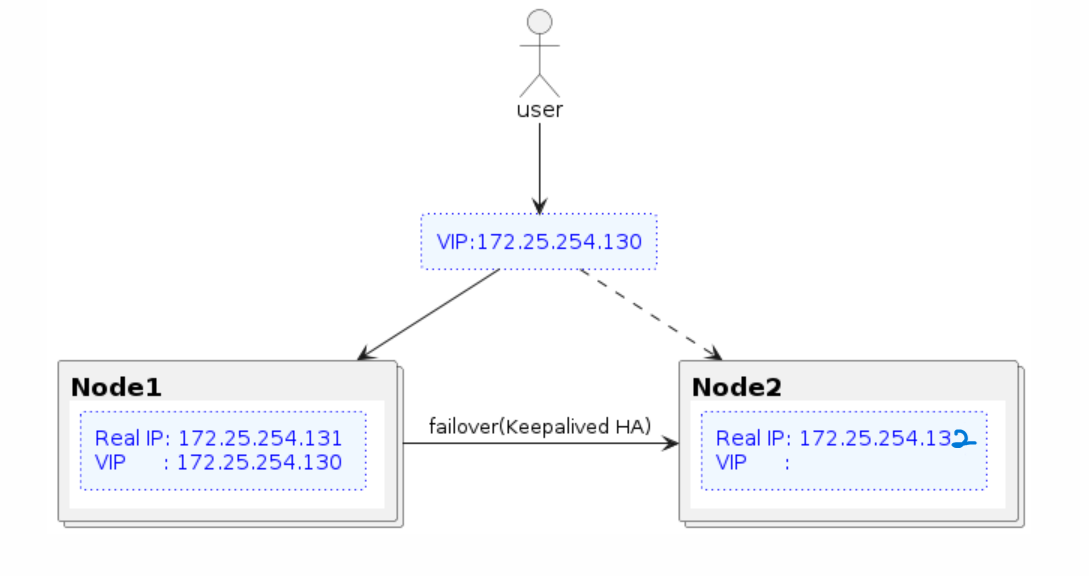

1. Nginx + Keepalived:

This approach uses resources inefficiently and suits only low-throughput scenarios. Without LVS, Keepalived alone can only provide an Active-Passive architecture, in which the backup server stays online but serves no traffic. Since the backup server has to stay online anyway, why not build an SLB (Server Load Balancer) with an Active-Active architecture?

While Keepalived + LVS can provide load balancing, LVS operates at Layer 4 (the transport layer) and cannot route on application-level information such as URLs or HTTP headers. HAProxy can also balance at the application layer (Layer 7), which gives it a clear advantage here.

2. Nginx + HAProxy:

This approach suffers from a single point of failure. If the single HAProxy server goes down, the entire service becomes unavailable.

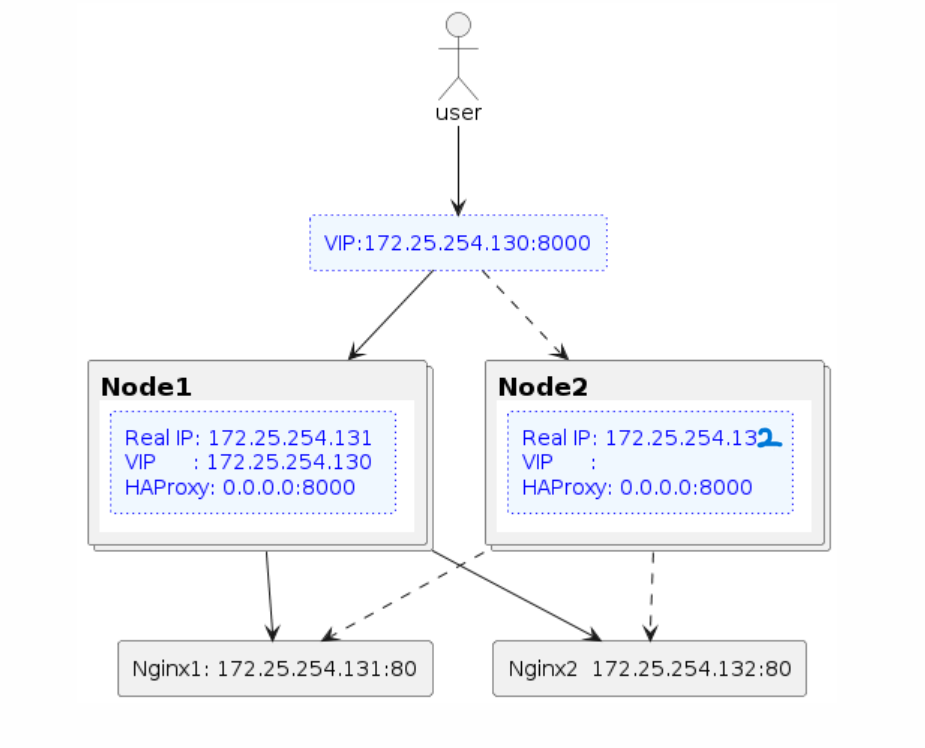

3. Nginx + Keepalived + HAProxy:

This setup supports high concurrency and removes the single point of failure.

To understand the features of Keepalived and HAProxy, we will implement the architecture in two steps:

- Use Nginx + Keepalived to achieve a high-availability Active-Passive architecture.

- Extend this with HAProxy to achieve high-availability load balancing.

Prerequisites

OS: Ubuntu 22.04.4 LTS

Nginx: 1.18.0

Keepalived: v2.2.4

HAProxy: 2.4.24

| No. | Hostname | IP | Node roles |
|---|---|---|---|
| #1 | svr1 | 172.25.254.131 | Keepalived (master), Nginx, HAProxy |
| #2 | svr2 | 172.25.254.132 | Keepalived (backup), Nginx, HAProxy |

Configuring Keepalived for Active-Passive HA

In the first phase, the architecture aims to allow users to access Nginx through a Virtual IP (VIP). If server #1 fails, the VIP will switch to server #2, ensuring service continuity.

Installing Keepalived and Nginx

sudo apt-get update

sudo apt-get install keepalived

sudo apt-get install nginx

Edit the Keepalived configuration file on both servers.

Server #1 Configuration

sudo vim /etc/keepalived/keepalived.conf

global_defs {

router_id nginx

}

# Nginx health check script

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

interval 2

weight 50

}

vrrp_instance VI_1 {

# Initial instance state (MASTER or BACKUP)

state MASTER

# Network interface for VRRP

interface ens33

# Virtual router ID (1-255), must be identical on both nodes

virtual_router_id 62

# Priority, higher priority becomes master

priority 151

# VRRP advertisement interval (in seconds)

advert_int 1

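# Non-preemptive mode: the current master keeps the role even after a higher-priority node comes back online.

# Per the keepalived.conf man page, nopreempt only takes effect when the initial state is BACKUP.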

nopreempt

# Source IP for VRRP unicast

unicast_src_ip 172.25.254.131

# Peer IP for VRRP unicast

unicast_peer {

172.25.254.132

}

# Authentication for node communication (must match on both nodes)

authentication {

auth_type PASS

auth_pass 1111

}

# Virtual IP

virtual_ipaddress {

172.25.254.130

}

# Health check script

track_script {

check_nginx

}

}

Server #2 Configuration

sudo vim /etc/keepalived/keepalived.conf

global_defs {

router_id nginx

}

# Nginx health check script

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

interval 2

weight 50

}

vrrp_instance VI_1 {

# Initial instance state (MASTER or BACKUP)

state BACKUP

# Network interface for VRRP

interface ens33

# Virtual router ID (1-255), must be identical on both nodes

virtual_router_id 62

# Priority, higher priority becomes master

priority 150

# VRRP advertisement interval (in seconds)

advert_int 1

# Non-preemptive mode, allows a lower-priority node to remain master

nopreempt

# Source IP for VRRP unicast

unicast_src_ip 172.25.254.132

# Peer IP for VRRP unicast

unicast_peer {

172.25.254.131

}

# Authentication for node communication (must match on both nodes)

authentication {

auth_type PASS

auth_pass 1111

}

# Virtual IP

virtual_ipaddress {

172.25.254.130

}

# Health check script

track_script {

check_nginx

}

}
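To make the interaction between priority and weight concrete, here is a small sketch in shell. It assumes Keepalived's documented rule for vrrp_script weights: a positive weight is added to the base priority while the check succeeds, and a negative weight is applied while the check fails. The function name effective_priority is hypothetical, and the sketch deliberately ignores nopreempt.

```shell
#!/bin/sh
# Hypothetical helper: model how a vrrp_script weight changes VRRP priority.
# Usage: effective_priority BASE WEIGHT CHECK_OK   (CHECK_OK: 1 = passed, 0 = failed)
effective_priority() {
    base=$1
    weight=$2
    check_ok=$3
    if [ "$weight" -gt 0 ] && [ "$check_ok" -eq 1 ]; then
        # Positive weight: added while the check succeeds.
        echo $((base + weight))
    elif [ "$weight" -lt 0 ] && [ "$check_ok" -eq 0 ]; then
        # Negative weight: added (as a negative number) while the check fails.
        echo $((base + weight))
    else
        echo "$base"
    fi
}

# Values taken from the two configurations above.
echo "svr1 healthy:    $(effective_priority 151 50 1)"
echo "svr2 healthy:    $(effective_priority 150 50 1)"
echo "svr1 nginx down: $(effective_priority 151 50 0)"
```

With both nodes healthy, svr1 outranks svr2 by one point. A failed check on svr1 would drop it below svr2, but with nopreempt set a higher-priority backup does not take over from a live master, which is presumably why the check script also stops Keepalived.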

Create the following script on both servers to monitor the status of Nginx:

sudo vim /etc/keepalived/check_nginx.sh

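#!/bin/sh

# Health check for Keepalived: fail when no nginx process is running.

if [ -z "$(/usr/bin/pidof nginx)" ]; then

# Stop Keepalived so this node releases the VIP and the peer can take over.

systemctl stop keepalived

exit 1

fi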

Add execution permissions:

sudo chmod +x /etc/keepalived/check_nginx.sh

Starting Services

Start Nginx and check its status:

sudo systemctl start nginx

systemctl status nginx

● nginx.service - A high performance web server and a reverse proxy server

Loaded: loaded (/lib/systemd/system/nginx.service; enabled; vendor preset: enabled)

Active: active (running) since Tue 2024-05-07 07:22:43 UTC; 37s ago

Docs: man:nginx(8)

Process: 205677 ExecStartPre=/usr/sbin/nginx -t -q -g daemon on; master_process on; (code=exited, status=0/SUCCESS)

Process: 205678 ExecStart=/usr/sbin/nginx -g daemon on; master_process on; (code=exited, status=0/SUCCESS)

Main PID: 205679 (nginx)

Tasks: 3 (limit: 4515)

Memory: 3.3M

CPU: 52ms

CGroup: /system.slice/nginx.service

├─205679 "nginx: master process /usr/sbin/nginx -g daemon on; master_process on;"

├─205680 "nginx: worker process" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" ""

└─205681 "nginx: worker process" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" ""

May 07 07:22:43 svr1 systemd[1]: Starting A high performance web server and a reverse proxy server...

May 07 07:22:43 svr1 systemd[1]: Started A high performance web server and a reverse proxy server.

Start keepalived and check its status:

sudo systemctl start keepalived

systemctl status keepalived

● keepalived.service - Keepalive Daemon (LVS and VRRP)

Loaded: loaded (/lib/systemd/system/keepalived.service; enabled; vendor preset: enabled)

Active: active (running) since Tue 2024-05-07 07:25:20 UTC; 18s ago

Main PID: 205688 (keepalived)

Tasks: 2 (limit: 4515)

Memory: 2.0M

CPU: 265ms

CGroup: /system.slice/keepalived.service

├─205688 /usr/sbin/keepalived --dont-fork

└─205689 /usr/sbin/keepalived --dont-fork

May 07 07:25:20 svr1 Keepalived[205688]: Starting VRRP child process, pid=205689

May 07 07:25:20 svr1 systemd[1]: keepalived.service: Got notification message from PID 205689, but reception only permitted for main PID 205688

May 07 07:25:20 svr1 Keepalived_vrrp[205689]: WARNING - default user 'keepalived_script' for script execution does not exist - please create.

May 07 07:25:20 svr1 Keepalived_vrrp[205689]: SECURITY VIOLATION - scripts are being executed but script_security not enabled.

May 07 07:25:20 svr1 Keepalived[205688]: Startup complete

May 07 07:25:20 svr1 systemd[1]: Started Keepalive Daemon (LVS and VRRP).

May 07 07:25:20 svr1 Keepalived_vrrp[205689]: (VI_1) Entering BACKUP STATE (init)

May 07 07:25:20 svr1 Keepalived_vrrp[205689]: VRRP_Script(check_nginx) succeeded

May 07 07:25:20 svr1 Keepalived_vrrp[205689]: (VI_1) Changing effective priority from 101 to 151

May 07 07:25:24 svr1 Keepalived_vrrp[205689]: (VI_1) Entering MASTER STATE
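The startup log above contains two warnings about script execution: the keepalived_script user does not exist, and script_security is not enabled. Keepalived's global_defs block accepts the enable_script_security and script_user directives for this purpose. As a hedged sketch, the hypothetical helper below only checks whether a configuration file mentions both directives. Which user the script should run as is a decision for your environment; root is assumed in the example comment because the check script calls systemctl.

```shell
#!/bin/sh
# Hypothetical helper: report whether a keepalived.conf sets both
# script-security directives. Prints "ok" or "missing".
script_security_status() {
    conf=$1
    if grep -Eq '^[[:space:]]*enable_script_security' "$conf" &&
       grep -Eq '^[[:space:]]*script_user[[:space:]]+[^[:space:]]+' "$conf"; then
        echo "ok"
    else
        echo "missing"
    fi
}

# Example (assumed addition to global_defs, not part of the original setup):
#   global_defs {
#       router_id nginx
#       enable_script_security
#       script_user root
#   }
#
# script_security_status /etc/keepalived/keepalived.conf
```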

After starting Keepalived, verify that the VIP is assigned on the master server.

Server #1 (Master)

ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:a1:d7:ea brd ff:ff:ff:ff:ff:ff

altname enp2s1

inet 172.25.254.131/24 brd 172.25.254.255 scope global ens33

valid_lft forever preferred_lft forever

inet 172.25.254.130/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fea1:d7ea/64 scope link

valid_lft forever preferred_lft forever

Server #2 (Backup)

ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:5c:c4:91 brd ff:ff:ff:ff:ff:ff

altname enp2s1

inet 172.25.254.132/24 brd 172.25.254.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe5c:c491/64 scope link

valid_lft forever preferred_lft forever
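Scanning the full ip addr output by eye gets tedious during repeated tests. The sketch below wraps the check in a hypothetical helper, vip_status, which reads an address listing on standard input and reports whether the VIP appears in it. It relies only on the inet ADDRESS/PREFIX format visible in the output above.

```shell
#!/bin/sh
# Hypothetical helper: read `ip addr` output on stdin and print
# "present" if the given IPv4 address is assigned, otherwise "absent".
vip_status() {
    vip=$1
    # -F matches the string literally, so dots are not regex wildcards;
    # the trailing slash keeps 172.25.254.13 from matching 172.25.254.130.
    if grep -qF "inet $vip/"; then
        echo "present"
    else
        echo "absent"
    fi
}

# Typical use on each server (interface name taken from this article):
#   ip -4 addr show dev ens33 | vip_status 172.25.254.130
```

Run on both servers, this should print present on exactly one of them at any time.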

Failover Testing

Stop Nginx on the master server and check if failover occurs:

sudo systemctl stop nginx

Verify that the VIP is now assigned to the backup server.

Server #1 (former master, VIP released)

ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:a1:d7:ea brd ff:ff:ff:ff:ff:ff

altname enp2s1

inet 172.25.254.131/24 brd 172.25.254.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fea1:d7ea/64 scope link

valid_lft forever preferred_lft forever

Server #2 (backup, now holding the VIP)

ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:5c:c4:91 brd ff:ff:ff:ff:ff:ff

altname enp2s1

inet 172.25.254.132/24 brd 172.25.254.255 scope global ens33

valid_lft forever preferred_lft forever

inet 172.25.254.130/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe5c:c491/64 scope link

valid_lft forever preferred_lft forever

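In the output above, the VIP 172.25.254.130 has moved from ens33 on server #1 to ens33 on server #2. Because the check script stops Keepalived when Nginx is down, start Nginx and then Keepalived again on server #1 (sudo systemctl start nginx, then sudo systemctl start keepalived) before running further tests.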
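To put a rough number on how long the switch takes, one option is to probe the VIP once per second while stopping Nginx and then count the probes that failed. The sketch below assumes a client machine with curl installed; count_failed_probes is a hypothetical helper, and the one-second interval means the result is only accurate to about a second.

```shell
#!/bin/sh
# Hypothetical helper: read "TIMESTAMP HTTP_CODE" lines on stdin and
# print how many probes did not return HTTP 200.
count_failed_probes() {
    awk '$2 != "200" { failed++ } END { print failed + 0 }'
}

# Probe loop to run on a client (stop it with Ctrl-C once the test is done):
#   while sleep 1; do
#       code=$(curl -s -o /dev/null --max-time 1 -w '%{http_code}' http://172.25.254.130/)
#       echo "$(date +%s) $code"
#   done | tee probes.log
#
# Then summarize the log:
#   count_failed_probes < probes.log
```

curl reports a code of 000 when no HTTP response was received, so connection failures during the handover are counted as failed probes.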

Adding HAProxy for Active-Active Load Balancing

Building on the previous architecture, we now add HAProxy so that requests arriving at the VIP are distributed across the Nginx instances on both servers.

Install HAProxy on both servers:

sudo apt-get install haproxy

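Edit the HAProxy configuration file on both servers, and restart HAProxy afterwards (sudo systemctl restart haproxy) so the changes take effect: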

sudo vim /etc/haproxy/haproxy.cfg

global

log /dev/log local0 info

log /dev/log local1 warning

chroot /var/lib/haproxy

stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners

stats timeout 30s

user haproxy

group haproxy

maxconn 60000

daemon

defaults

log global

mode http

option httplog

option dontlognull

retries 3

timeout http-request 10s

timeout connect 3s

timeout client 10s

timeout server 10s

timeout http-keep-alive 10s

timeout check 2s

errorfile 400 /etc/haproxy/errors/400.http

errorfile 403 /etc/haproxy/errors/403.http

errorfile 408 /etc/haproxy/errors/408.http

errorfile 500 /etc/haproxy/errors/500.http

errorfile 502 /etc/haproxy/errors/502.http

errorfile 503 /etc/haproxy/errors/503.http

errorfile 504 /etc/haproxy/errors/504.http

frontend http-in

bind *:8000

maxconn 20000

default_backend servers

backend servers

balance roundrobin

server server1 172.25.254.131:80 check

server server2 172.25.254.132:80 check
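Note that the Keepalived check script shown earlier only watches Nginx. Once HAProxy is the entry point on port 8000, it may be worth failing the check when HAProxy is down as well. The following is a sketch under that assumption, not part of the original setup: first_missing_service is a hypothetical helper, and the PIDOF variable exists only so the lookup command can be swapped out.

```shell
#!/bin/sh
# Command used to look up process IDs; overridable, defaults to pidof.
PIDOF=${PIDOF:-/usr/bin/pidof}

# Hypothetical helper: print the first service name that has no running
# process and return 1; print nothing and return 0 if all are running.
first_missing_service() {
    for svc in "$@"; do
        if [ -z "$($PIDOF "$svc")" ]; then
            echo "$svc"
            return 1
        fi
    done
    return 0
}

# Possible use inside /etc/keepalived/check_nginx.sh:
#   if ! first_missing_service nginx haproxy >/dev/null; then
#       systemctl stop keepalived
#       exit 1
#   fi
```

Checking process presence is the same coarse test the original script uses; it does not verify that either service is actually answering requests.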

Testing Load Balancing and High Availability

From a client machine, send repeated requests to the VIP on port 8000 to confirm that HAProxy and Keepalived work together and that requests are balanced across the two servers.

while sleep 1 ; do curl http://172.25.254.130:8000 ; done
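With the stock Nginx welcome page, both backends typically return identical content, so the loop above cannot show which server answered. One way to make the distribution visible is to tally the responses, assuming you first replace each server's index page with a single line containing its hostname (an assumption, not a step from this article). tally_responses is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: count identical lines on stdin and print
# "LINE COUNT" pairs, sorted by line.
tally_responses() {
    awk '{ count[$0]++ } END { for (line in count) print line, count[line] }' | sort
}

# Example on a client, assuming each index page contains only the hostname:
#   for i in $(seq 1 10); do
#       curl -s http://172.25.254.130:8000/
#   done | tally_responses
```

With balance roundrobin and both backends passing their health checks, the two counts should come out roughly equal. Stopping Nginx on one server should shift all responses to the other.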